#include <lstmtrainer.h>

Public Member Functions | |

| LSTMTrainer () | |

| LSTMTrainer (FileReader file_reader, FileWriter file_writer, CheckPointReader checkpoint_reader, CheckPointWriter checkpoint_writer, const char *model_base, const char *checkpoint_name, int debug_interval, inT64 max_memory) | |

| virtual | ~LSTMTrainer () |

| bool | TryLoadingCheckpoint (const char *filename) |

| void | InitCharSet (const UNICHARSET &unicharset, const STRING &script_dir, int train_flags) |

| void | InitCharSet (const UNICHARSET &unicharset, const UnicharCompress &recoder) |

| bool | InitNetwork (const STRING &network_spec, int append_index, int net_flags, float weight_range, float learning_rate, float momentum) |

| int | InitTensorFlowNetwork (const std::string &tf_proto) |

| void | InitIterations () |

| double | ActivationError () const |

| double | CharError () const |

| const double * | error_rates () const |

| double | best_error_rate () const |

| int | best_iteration () const |

| int | learning_iteration () const |

| int | improvement_steps () const |

| void | set_perfect_delay (int delay) |

| const GenericVector< char > & | best_trainer () const |

| double | NewSingleError (ErrorTypes type) const |

| double | LastSingleError (ErrorTypes type) const |

| const DocumentCache & | training_data () const |

| DocumentCache * | mutable_training_data () |

| Trainability | GridSearchDictParams (const ImageData *trainingdata, int iteration, double min_dict_ratio, double dict_ratio_step, double max_dict_ratio, double min_cert_offset, double cert_offset_step, double max_cert_offset, STRING *results) |

| void | SetSerializeMode (SerializeAmount serialize_amount) const |

| void | DebugNetwork () |

| bool | LoadAllTrainingData (const GenericVector< STRING > &filenames) |

| bool | MaintainCheckpoints (TestCallback tester, STRING *log_msg) |

| bool | MaintainCheckpointsSpecific (int iteration, const GenericVector< char > *train_model, const GenericVector< char > *rec_model, TestCallback tester, STRING *log_msg) |

| void | PrepareLogMsg (STRING *log_msg) const |

| void | LogIterations (const char *intro_str, STRING *log_msg) const |

| bool | TransitionTrainingStage (float error_threshold) |

| int | CurrentTrainingStage () const |

| virtual bool | Serialize (TFile *fp) const |

| virtual bool | DeSerialize (TFile *fp) |

| void | StartSubtrainer (STRING *log_msg) |

| SubTrainerResult | UpdateSubtrainer (STRING *log_msg) |

| void | ReduceLearningRates (LSTMTrainer *samples_trainer, STRING *log_msg) |

| int | ReduceLayerLearningRates (double factor, int num_samples, LSTMTrainer *samples_trainer) |

| bool | EncodeString (const STRING &str, GenericVector< int > *labels) const |

| void | ConvertToInt () |

| const ImageData * | TrainOnLine (LSTMTrainer *samples_trainer, bool batch) |

| Trainability | TrainOnLine (const ImageData *trainingdata, bool batch) |

| Trainability | PrepareForBackward (const ImageData *trainingdata, NetworkIO *fwd_outputs, NetworkIO *targets) |

| bool | SaveTrainingDump (SerializeAmount serialize_amount, const LSTMTrainer *trainer, GenericVector< char > *data) const |

| bool | ReadTrainingDump (const GenericVector< char > &data, LSTMTrainer *trainer) |

| bool | ReadSizedTrainingDump (const char *data, int size) |

| void | SetupCheckpointInfo () |

| void | SaveRecognitionDump (GenericVector< char > *data) const |

| bool | SaveBestModel (FileWriter writer) const |

| STRING | DumpFilename () const |

| void | FillErrorBuffer (double new_error, ErrorTypes type) |

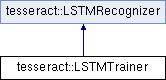

Public Member Functions inherited from tesseract::LSTMRecognizer | |

| LSTMRecognizer () | |

| ~LSTMRecognizer () | |

| int | NumOutputs () const |

| int | training_iteration () const |

| int | sample_iteration () const |

| double | learning_rate () const |

| bool | IsHardening () const |

| LossType | OutputLossType () const |

| bool | SimpleTextOutput () const |

| bool | IsIntMode () const |

| bool | IsRecoding () const |

| CachingStrategy | CacheStrategy () const |

| bool | IsTensorFlow () const |

| GenericVector< STRING > | EnumerateLayers () const |

| Network * | GetLayer (const STRING &id) const |

| float | GetLayerLearningRate (const STRING &id) const |

| void | ScaleLearningRate (double factor) |

| void | ScaleLayerLearningRate (const STRING &id, double factor) |

| bool | IsUsingAdaGrad () const |

| const UNICHARSET & | GetUnicharset () const |

| const Dict * | GetDict () const |

| void | SetIteration (int iteration) |

| int | NumInputs () const |

| int | null_char () const |

| bool | Serialize (TFile *fp) const |

| bool | DeSerialize (TFile *fp) |

| bool | LoadDictionary (const char *lang, TessdataManager *mgr) |

| void | RecognizeLine (const ImageData &image_data, bool invert, bool debug, double worst_dict_cert, bool use_alternates, const UNICHARSET *target_unicharset, const TBOX &line_box, float score_ratio, bool one_word, PointerVector< WERD_RES > *words) |

| void | WordsFromOutputs (const NetworkIO &outputs, const GenericVector< int > &labels, const GenericVector< int > label_coords, const TBOX &line_box, bool debug, bool use_alternates, bool one_word, float score_ratio, float scale_factor, const UNICHARSET *target_unicharset, PointerVector< WERD_RES > *words) |

| void | OutputStats (const NetworkIO &outputs, float *min_output, float *mean_output, float *sd) |

| bool | RecognizeLine (const ImageData &image_data, bool invert, bool debug, bool re_invert, float label_threshold, float *scale_factor, NetworkIO *inputs, NetworkIO *outputs) |

| WERD_RES * | WordFromOutput (const TBOX &line_box, const NetworkIO &outputs, int word_start, int word_end, float score_ratio, float space_certainty, bool debug, bool use_alternates, const UNICHARSET *target_unicharset, const GenericVector< int > &labels, const GenericVector< int > &label_coords, float scale_factor) |

| WERD_RES * | InitializeWord (const TBOX &line_box, int word_start, int word_end, float space_certainty, bool use_alternates, const UNICHARSET *target_unicharset, const GenericVector< int > &labels, const GenericVector< int > &label_coords, float scale_factor) |

| STRING | DecodeLabels (const GenericVector< int > &labels) |

| void | DisplayForward (const NetworkIO &inputs, const GenericVector< int > &labels, const GenericVector< int > &label_coords, const char *window_name, ScrollView **window) |

Static Public Member Functions | |

| static bool | EncodeString (const STRING &str, const UNICHARSET &unicharset, const UnicharCompress *recoder, bool simple_text, int null_char, GenericVector< int > *labels) |

| static LSTMRecognizer * | ReadRecognitionDump (const GenericVector< char > &data) |

Protected Member Functions | |

| void | EmptyConstructor () |

| void | SetUnicharsetProperties (const STRING &script_dir) |

| bool | DebugLSTMTraining (const NetworkIO &inputs, const ImageData &trainingdata, const NetworkIO &fwd_outputs, const GenericVector< int > &truth_labels, const NetworkIO &outputs) |

| void | DisplayTargets (const NetworkIO &targets, const char *window_name, ScrollView **window) |

| bool | ComputeTextTargets (const NetworkIO &outputs, const GenericVector< int > &truth_labels, NetworkIO *targets) |

| bool | ComputeCTCTargets (const GenericVector< int > &truth_labels, NetworkIO *outputs, NetworkIO *targets) |

| double | ComputeErrorRates (const NetworkIO &deltas, double char_error, double word_error) |

| double | ComputeRMSError (const NetworkIO &deltas) |

| double | ComputeWinnerError (const NetworkIO &deltas) |

| double | ComputeCharError (const GenericVector< int > &truth_str, const GenericVector< int > &ocr_str) |

| double | ComputeWordError (STRING *truth_str, STRING *ocr_str) |

| void | UpdateErrorBuffer (double new_error, ErrorTypes type) |

| void | RollErrorBuffers () |

| STRING | UpdateErrorGraph (int iteration, double error_rate, const GenericVector< char > &model_data, TestCallback tester) |

Protected Member Functions inherited from tesseract::LSTMRecognizer | |

| void | SetRandomSeed () |

| void | DisplayLSTMOutput (const GenericVector< int > &labels, const GenericVector< int > &xcoords, int height, ScrollView *window) |

| void | DebugActivationPath (const NetworkIO &outputs, const GenericVector< int > &labels, const GenericVector< int > &xcoords) |

| void | DebugActivationRange (const NetworkIO &outputs, const char *label, int best_choice, int x_start, int x_end) |

| void | LabelsFromOutputs (const NetworkIO &outputs, float null_thr, GenericVector< int > *labels, GenericVector< int > *xcoords) |

| void | LabelsViaThreshold (const NetworkIO &output, float null_threshold, GenericVector< int > *labels, GenericVector< int > *xcoords) |

| void | LabelsViaCTC (const NetworkIO &output, GenericVector< int > *labels, GenericVector< int > *xcoords) |

| void | LabelsViaReEncode (const NetworkIO &output, GenericVector< int > *labels, GenericVector< int > *xcoords) |

| void | LabelsViaSimpleText (const NetworkIO &output, GenericVector< int > *labels, GenericVector< int > *xcoords) |

| BLOB_CHOICE_LIST * | GetBlobChoices (int col, int row, bool debug, const NetworkIO &output, const UNICHARSET *target_unicharset, int x_start, int x_end, float score_ratio) |

| bool | AddBlobChoices (int unichar_id, float rating, float certainty, int col, int row, const UNICHARSET *target_unicharset, BLOB_CHOICE_IT *bc_it) |

| const char * | DecodeLabel (const GenericVector< int > &labels, int start, int *end, int *decoded) |

| const char * | DecodeSingleLabel (int label) |

Static Protected Attributes | |

| static const int | kRollingBufferSize_ = 1000 |

Detailed Description

Definition at line 89 of file lstmtrainer.h.

Constructor & Destructor Documentation

◆ LSTMTrainer() [1/2]

| tesseract::LSTMTrainer::LSTMTrainer | ( | ) |

Definition at line 73 of file lstmtrainer.cpp.

◆ LSTMTrainer() [2/2]

| tesseract::LSTMTrainer::LSTMTrainer | ( | FileReader | file_reader, |

| FileWriter | file_writer, | ||

| CheckPointReader | checkpoint_reader, | ||

| CheckPointWriter | checkpoint_writer, | ||

| const char * | model_base, | ||

| const char * | checkpoint_name, | ||

| int | debug_interval, | ||

| inT64 | max_memory | ||

| ) |

Definition at line 86 of file lstmtrainer.cpp.

◆ ~LSTMTrainer()

| tesseract::LSTMTrainer::~LSTMTrainer | ( | ) |

virtual

Definition at line 113 of file lstmtrainer.cpp.

Member Function Documentation

◆ ActivationError()

| double tesseract::LSTMTrainer::ActivationError | ( | ) | const |

inline

Definition at line 136 of file lstmtrainer.h.

◆ best_error_rate()

| double tesseract::LSTMTrainer::best_error_rate | ( | ) | const |

inline

Definition at line 143 of file lstmtrainer.h.

◆ best_iteration()

| int tesseract::LSTMTrainer::best_iteration | ( | ) | const |

inline

Definition at line 146 of file lstmtrainer.h.

◆ best_trainer()

| const GenericVector< char > & tesseract::LSTMTrainer::best_trainer | ( | ) | const |

inline

Definition at line 152 of file lstmtrainer.h.

◆ CharError()

| double tesseract::LSTMTrainer::CharError | ( | ) | const |

inline

Definition at line 139 of file lstmtrainer.h.

◆ ComputeCharError()

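| double tesseract::LSTMTrainer::ComputeCharError | ( | const GenericVector< int > & | truth_str, |

| const GenericVector< int > & | ocr_str | ||

| ) |

protected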

Definition at line 1186 of file lstmtrainer.cpp.

◆ ComputeCTCTargets()

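| bool tesseract::LSTMTrainer::ComputeCTCTargets | ( | const GenericVector< int > & | truth_labels, |

| NetworkIO * | outputs, | ||

| NetworkIO * | targets | ||

| ) |

protected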

Definition at line 1118 of file lstmtrainer.cpp.

◆ ComputeErrorRates()

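| double tesseract::LSTMTrainer::ComputeErrorRates | ( | const NetworkIO & | deltas, |

| double | char_error, | ||

| double | word_error | ||

| ) |

protected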

Definition at line 1129 of file lstmtrainer.cpp.

◆ ComputeRMSError()

| double tesseract::LSTMTrainer::ComputeRMSError | ( | const NetworkIO & | deltas | ) |

protected

Definition at line 1149 of file lstmtrainer.cpp.

◆ ComputeTextTargets()

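| bool tesseract::LSTMTrainer::ComputeTextTargets | ( | const NetworkIO & | outputs, |

| const GenericVector< int > & | truth_labels, | ||

| NetworkIO * | targets | ||

| ) |

protected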

Definition at line 1098 of file lstmtrainer.cpp.

◆ ComputeWinnerError()

| double tesseract::LSTMTrainer::ComputeWinnerError | ( | const NetworkIO & | deltas | ) |

protected

Definition at line 1168 of file lstmtrainer.cpp.

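◆ ComputeWordError()

| double tesseract::LSTMTrainer::ComputeWordError | ( | STRING * | truth_str, |

| STRING * | ocr_str | ||

| ) |

protected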

Definition at line 1214 of file lstmtrainer.cpp.

◆ ConvertToInt()

| void tesseract::LSTMTrainer::ConvertToInt | ( | ) |

inline

Definition at line 257 of file lstmtrainer.h.

◆ CurrentTrainingStage()

| int tesseract::LSTMTrainer::CurrentTrainingStage | ( | ) | const |

inline

Definition at line 213 of file lstmtrainer.h.

◆ DebugLSTMTraining()

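| bool tesseract::LSTMTrainer::DebugLSTMTraining | ( | const NetworkIO & | inputs, |

| const ImageData & | trainingdata, | ||

| const NetworkIO & | fwd_outputs, | ||

| const GenericVector< int > & | truth_labels, | ||

| const NetworkIO & | outputs | ||

| ) |

protected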

Definition at line 1028 of file lstmtrainer.cpp.

◆ DebugNetwork()

| void tesseract::LSTMTrainer::DebugNetwork | ( | ) |

Definition at line 298 of file lstmtrainer.cpp.

◆ DeSerialize()

| bool tesseract::LSTMTrainer::DeSerialize | ( | TFile * | fp | ) |

virtual

Definition at line 483 of file lstmtrainer.cpp.

◆ DisplayTargets()

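| void tesseract::LSTMTrainer::DisplayTargets | ( | const NetworkIO & | targets, |

| const char * | window_name, | ||

| ScrollView ** | window | ||

| ) |

protected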

Definition at line 1061 of file lstmtrainer.cpp.

◆ DumpFilename()

| STRING tesseract::LSTMTrainer::DumpFilename | ( | ) | const |

Definition at line 951 of file lstmtrainer.cpp.

◆ EmptyConstructor()

| void tesseract::LSTMTrainer::EmptyConstructor | ( | ) |

protected

Definition at line 967 of file lstmtrainer.cpp.

◆ EncodeString() [1/2]

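| bool tesseract::LSTMTrainer::EncodeString | ( | const STRING & | str, |

| GenericVector< int > * | labels | ||

| ) | const |

inline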

Definition at line 247 of file lstmtrainer.h.

◆ EncodeString() [2/2]

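| bool tesseract::LSTMTrainer::EncodeString | ( | const STRING & | str, |

| const UNICHARSET & | unicharset, | ||

| const UnicharCompress * | recoder, | ||

| bool | simple_text, | ||

| int | null_char, | ||

| GenericVector< int > * | labels | ||

| ) |

static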

Definition at line 748 of file lstmtrainer.cpp.

◆ error_rates()

| const double * tesseract::LSTMTrainer::error_rates | ( | ) | const |

inline

Definition at line 140 of file lstmtrainer.h.

◆ FillErrorBuffer()

| void tesseract::LSTMTrainer::FillErrorBuffer | ( | double | new_error, |

| ErrorTypes | type | ||

| ) |

Definition at line 960 of file lstmtrainer.cpp.

◆ GridSearchDictParams()

| Trainability tesseract::LSTMTrainer::GridSearchDictParams | ( | const ImageData * | trainingdata, |

| int | iteration, | ||

| double | min_dict_ratio, | ||

| double | dict_ratio_step, | ||

| double | max_dict_ratio, | ||

| double | min_cert_offset, | ||

| double | cert_offset_step, | ||

| double | max_cert_offset, | ||

| STRING * | results | ||

| ) |

Definition at line 248 of file lstmtrainer.cpp.
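
`GridSearchDictParams` takes a (min, step, max) triple for each of two parameters, which suggests a sweep over a two-dimensional grid. The sketch below only enumerates such a grid; what the trainer evaluates at each point, and what it writes to `results`, is not described on this page. `GridCount` and `DictParamGrid` are hypothetical names, and treating each upper bound as exclusive is an assumption.

```cpp
#include <algorithm>
#include <cmath>
#include <utility>
#include <vector>

// Hypothetical helper: how many values min, min+step, min+2*step, ...
// fall below max_value. Returns 0 for an empty or ill-formed range.
int GridCount(double min_value, double step, double max_value) {
  if (step <= 0.0 || max_value <= min_value) return 0;
  // The small epsilon keeps rounding noise from adding a point at max_value.
  const double steps = std::ceil((max_value - min_value) / step - 1e-9);
  return std::max(1, static_cast<int>(steps));
}

// Hypothetical helper: all (dict_ratio, cert_offset) pairs on the grid,
// with dict_ratio varying slowest.
std::vector<std::pair<double, double>> DictParamGrid(
    double min_dict_ratio, double dict_ratio_step, double max_dict_ratio,
    double min_cert_offset, double cert_offset_step, double max_cert_offset) {
  const int num_ratios =
      GridCount(min_dict_ratio, dict_ratio_step, max_dict_ratio);
  const int num_offsets =
      GridCount(min_cert_offset, cert_offset_step, max_cert_offset);
  std::vector<std::pair<double, double>> grid;
  // Index-based loops avoid accumulating floating-point error in the values.
  for (int r = 0; r < num_ratios; ++r) {
    for (int c = 0; c < num_offsets; ++c) {
      grid.emplace_back(min_dict_ratio + r * dict_ratio_step,
                        min_cert_offset + c * cert_offset_step);
    }
  }
  return grid;
}
```

For example, `DictParamGrid(1.0, 0.5, 2.0, 0.0, 1.0, 2.0)` yields four pairs, from `(1.0, 0.0)` to `(1.5, 1.0)`. Computing each value from its index rather than by repeated addition keeps the grid points exact multiples of the step.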

◆ improvement_steps()

| int tesseract::LSTMTrainer::improvement_steps | ( | ) | const |

inline

Definition at line 150 of file lstmtrainer.h.

◆ InitCharSet() [1/2]

| void tesseract::LSTMTrainer::InitCharSet | ( | const UNICHARSET & | unicharset, |

| const STRING & | script_dir, | ||

| int | train_flags | ||

| ) |

Definition at line 138 of file lstmtrainer.cpp.

◆ InitCharSet() [2/2]

| void tesseract::LSTMTrainer::InitCharSet | ( | const UNICHARSET & | unicharset, |

| const UnicharCompress & | recoder | ||

| ) |

Definition at line 152 of file lstmtrainer.cpp.

◆ InitIterations()

| void tesseract::LSTMTrainer::InitIterations | ( | ) |

Definition at line 223 of file lstmtrainer.cpp.

◆ InitNetwork()

| bool tesseract::LSTMTrainer::InitNetwork | ( | const STRING & | network_spec, |

| int | append_index, | ||

| int | net_flags, | ||

| float | weight_range, | ||

| float | learning_rate, | ||

| float | momentum | ||

| ) |

Definition at line 175 of file lstmtrainer.cpp.

◆ InitTensorFlowNetwork()

| int tesseract::LSTMTrainer::InitTensorFlowNetwork | ( | const std::string & | tf_proto | ) |

Definition at line 203 of file lstmtrainer.cpp.

◆ LastSingleError()

| double tesseract::LSTMTrainer::LastSingleError | ( | ErrorTypes | type | ) | const |

inline

Definition at line 160 of file lstmtrainer.h.

◆ learning_iteration()

| int tesseract::LSTMTrainer::learning_iteration | ( | ) | const |

inline

Definition at line 149 of file lstmtrainer.h.

◆ LoadAllTrainingData()

| bool tesseract::LSTMTrainer::LoadAllTrainingData | ( | const GenericVector< STRING > & | filenames | ) |

Definition at line 305 of file lstmtrainer.cpp.

◆ LogIterations()

| void tesseract::LSTMTrainer::LogIterations | ( | const char * | intro_str, |

| STRING * | log_msg | ||

| ) | const |

Definition at line 414 of file lstmtrainer.cpp.

◆ MaintainCheckpoints()

| bool tesseract::LSTMTrainer::MaintainCheckpoints | ( | TestCallback | tester, |

| STRING * | log_msg | ||

| ) |

Definition at line 314 of file lstmtrainer.cpp.

◆ MaintainCheckpointsSpecific()

| bool tesseract::LSTMTrainer::MaintainCheckpointsSpecific | ( | int | iteration, |

| const GenericVector< char > * | train_model, | ||

| const GenericVector< char > * | rec_model, | ||

| TestCallback | tester, | ||

| STRING * | log_msg | ||

| ) |

◆ mutable_training_data()

| DocumentCache * tesseract::LSTMTrainer::mutable_training_data | ( | ) |

inline

Definition at line 168 of file lstmtrainer.h.

◆ NewSingleError()

| double tesseract::LSTMTrainer::NewSingleError | ( | ErrorTypes | type | ) | const |

inline

Definition at line 154 of file lstmtrainer.h.

◆ PrepareForBackward()

| Trainability tesseract::LSTMTrainer::PrepareForBackward | ( | const ImageData * | trainingdata, |

| NetworkIO * | fwd_outputs, | ||

| NetworkIO * | targets | ||

| ) |

Definition at line 827 of file lstmtrainer.cpp.

◆ PrepareLogMsg()

| void tesseract::LSTMTrainer::PrepareLogMsg | ( | STRING * | log_msg | ) | const |

Definition at line 402 of file lstmtrainer.cpp.

◆ ReadRecognitionDump()

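| LSTMRecognizer * tesseract::LSTMTrainer::ReadRecognitionDump | ( | const GenericVector< char > & | data | ) |

static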

Definition at line 940 of file lstmtrainer.cpp.

◆ ReadSizedTrainingDump()

| bool tesseract::LSTMTrainer::ReadSizedTrainingDump | ( | const char * | data, |

| int | size | ||

| ) |

Definition at line 924 of file lstmtrainer.cpp.

◆ ReadTrainingDump()

| bool tesseract::LSTMTrainer::ReadTrainingDump | ( | const GenericVector< char > & | data, |

| LSTMTrainer * | trainer | ||

| ) |

Definition at line 919 of file lstmtrainer.cpp.

◆ ReduceLayerLearningRates()

| int tesseract::LSTMTrainer::ReduceLayerLearningRates | ( | double | factor, |

| int | num_samples, | ||

| LSTMTrainer * | samples_trainer | ||

| ) |

Definition at line 639 of file lstmtrainer.cpp.

◆ ReduceLearningRates()

| void tesseract::LSTMTrainer::ReduceLearningRates | ( | LSTMTrainer * | samples_trainer, |

| STRING * | log_msg | ||

| ) |

Definition at line 620 of file lstmtrainer.cpp.

◆ RollErrorBuffers()

| void tesseract::LSTMTrainer::RollErrorBuffers | ( | ) |

protected

Definition at line 1260 of file lstmtrainer.cpp.

◆ SaveBestModel()

| bool tesseract::LSTMTrainer::SaveBestModel | ( | FileWriter | writer | ) | const |

◆ SaveRecognitionDump()

| void tesseract::LSTMTrainer::SaveRecognitionDump | ( | GenericVector< char > * | data | ) | const |

Definition at line 931 of file lstmtrainer.cpp.

◆ SaveTrainingDump()

| bool tesseract::LSTMTrainer::SaveTrainingDump | ( | SerializeAmount | serialize_amount, |

| const LSTMTrainer * | trainer, | ||

| GenericVector< char > * | data | ||

| ) | const |

Definition at line 909 of file lstmtrainer.cpp.

◆ Serialize()

| bool tesseract::LSTMTrainer::Serialize | ( | TFile * | fp | ) | const |

virtual

Definition at line 433 of file lstmtrainer.cpp.

◆ set_perfect_delay()

| void tesseract::LSTMTrainer::set_perfect_delay | ( | int | delay | ) |

inline

Definition at line 151 of file lstmtrainer.h.

◆ SetSerializeMode()

| void tesseract::LSTMTrainer::SetSerializeMode | ( | SerializeAmount | serialize_amount | ) | const |

inline

Definition at line 178 of file lstmtrainer.h.

◆ SetUnicharsetProperties()

| void tesseract::LSTMTrainer::SetUnicharsetProperties | ( | const STRING & | script_dir | ) |

protected

Definition at line 982 of file lstmtrainer.cpp.

◆ SetupCheckpointInfo()

| void tesseract::LSTMTrainer::SetupCheckpointInfo | ( | ) |

◆ StartSubtrainer()

| void tesseract::LSTMTrainer::StartSubtrainer | ( | STRING * | log_msg | ) |

Definition at line 547 of file lstmtrainer.cpp.

◆ training_data()

| const DocumentCache & tesseract::LSTMTrainer::training_data | ( | ) | const |

inline

Definition at line 165 of file lstmtrainer.h.

◆ TrainOnLine() [1/2]

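| const ImageData * tesseract::LSTMTrainer::TrainOnLine | ( | LSTMTrainer * | samples_trainer, |

| bool | batch | ||

| ) |

inline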

Definition at line 268 of file lstmtrainer.h.

◆ TrainOnLine() [2/2]

| Trainability tesseract::LSTMTrainer::TrainOnLine | ( | const ImageData * | trainingdata, |

| bool | batch | ||

| ) |

Definition at line 794 of file lstmtrainer.cpp.

◆ TransitionTrainingStage()

| bool tesseract::LSTMTrainer::TransitionTrainingStage | ( | float | error_threshold | ) |

Definition at line 423 of file lstmtrainer.cpp.

◆ TryLoadingCheckpoint()

| bool tesseract::LSTMTrainer::TryLoadingCheckpoint | ( | const char * | filename | ) |

Definition at line 125 of file lstmtrainer.cpp.

◆ UpdateErrorBuffer()

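| void tesseract::LSTMTrainer::UpdateErrorBuffer | ( | double | new_error, |

| ErrorTypes | type | ||

| ) |

protected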

Definition at line 1247 of file lstmtrainer.cpp.

◆ UpdateErrorGraph()

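| STRING tesseract::LSTMTrainer::UpdateErrorGraph | ( | int | iteration, |

| double | error_rate, | ||

| const GenericVector< char > & | model_data, | ||

| TestCallback | tester | ||

| ) |

protected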

Definition at line 1279 of file lstmtrainer.cpp.

◆ UpdateSubtrainer()

| SubTrainerResult tesseract::LSTMTrainer::UpdateSubtrainer | ( | STRING * | log_msg | ) |

Definition at line 577 of file lstmtrainer.cpp.

Member Data Documentation

◆ align_win_

|

protected |

Definition at line 390 of file lstmtrainer.h.

◆ best_error_history_

|

protected |

Definition at line 451 of file lstmtrainer.h.

◆ best_error_iterations_

|

protected |

Definition at line 452 of file lstmtrainer.h.

◆ best_error_rate_

|

protected |

Definition at line 424 of file lstmtrainer.h.

◆ best_error_rates_

|

protected |

Definition at line 426 of file lstmtrainer.h.

◆ best_iteration_

|

protected |

Definition at line 428 of file lstmtrainer.h.

◆ best_model_data_

|

protected |

Definition at line 438 of file lstmtrainer.h.

◆ best_model_name_

|

protected |

Definition at line 410 of file lstmtrainer.h.

◆ best_trainer_

|

protected |

Definition at line 441 of file lstmtrainer.h.

◆ checkpoint_iteration_

|

protected |

Definition at line 400 of file lstmtrainer.h.

◆ checkpoint_name_

|

protected |

Definition at line 404 of file lstmtrainer.h.

◆ checkpoint_reader_

|

protected |

Definition at line 418 of file lstmtrainer.h.

◆ checkpoint_writer_

|

protected |

Definition at line 419 of file lstmtrainer.h.

◆ ctc_win_

|

protected |

Definition at line 394 of file lstmtrainer.h.

◆ debug_interval_

|

protected |

Definition at line 398 of file lstmtrainer.h.

◆ error_buffers_

|

protected |

Definition at line 473 of file lstmtrainer.h.

◆ error_rate_of_last_saved_best_

|

protected |

Definition at line 446 of file lstmtrainer.h.

◆ error_rates_

|

protected |

Definition at line 475 of file lstmtrainer.h.

◆ file_reader_

|

protected |

Definition at line 414 of file lstmtrainer.h.

◆ file_writer_

|

protected |

Definition at line 415 of file lstmtrainer.h.

◆ improvement_steps_

|

protected |

Definition at line 454 of file lstmtrainer.h.

◆ kRollingBufferSize_

| const int tesseract::LSTMTrainer::kRollingBufferSize_ = 1000 |

static protected

Definition at line 472 of file lstmtrainer.h.
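
`kRollingBufferSize_` is 1000, and the class also declares `UpdateErrorBuffer`, `FillErrorBuffer`, `RollErrorBuffers` and `error_rates`. A common pattern consistent with those names is a fixed-size ring of recent per-sample errors whose mean is reported as a rate. The sketch below is a standalone illustration of that pattern only: `RollingErrorBuffer` is a hypothetical class, and the percent scaling and the modulo indexing are assumptions rather than details confirmed by this page.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a single rolling error buffer; not the
// Tesseract implementation.
class RollingErrorBuffer {
 public:
  // A capacity of 0 is bumped to 1 so that indexing stays well defined.
  explicit RollingErrorBuffer(std::size_t capacity = 1000)
      : values_(std::max<std::size_t>(capacity, 1), 0.0) {}

  // Stores new_error in the slot for the current sample, overwriting the
  // value written `capacity` samples earlier, and returns the mean of the
  // filled slots as a percentage.
  double Update(double new_error) {
    values_[count_ % values_.size()] = new_error;
    ++count_;
    const std::size_t filled = std::min(count_, values_.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < filled; ++i) sum += values_[i];
    return 100.0 * sum / static_cast<double>(filled);
  }

 private:
  std::vector<double> values_;  // ring of the most recent errors
  std::size_t count_ = 0;       // total samples seen so far
};
```

With a capacity of 2, feeding the errors 1, 0, 0 produces rates of 100, 50 and 0: the third sample overwrites the first, so only the two most recent samples contribute. A window of 1000 smooths per-sample noise in the same way while still tracking recent progress.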

◆ last_perfect_training_iteration_

|

protected |

Definition at line 469 of file lstmtrainer.h.

◆ learning_iteration_

|

protected |

Definition at line 458 of file lstmtrainer.h.

◆ model_base_

|

protected |

Definition at line 402 of file lstmtrainer.h.

◆ num_training_stages_

|

protected |

Definition at line 412 of file lstmtrainer.h.

◆ perfect_delay_

|

protected |

Definition at line 466 of file lstmtrainer.h.

◆ prev_sample_iteration_

|

protected |

Definition at line 460 of file lstmtrainer.h.

◆ recon_win_

|

protected |

Definition at line 396 of file lstmtrainer.h.

◆ serialize_amount_

|

mutable protected |

Definition at line 408 of file lstmtrainer.h.

◆ stall_iteration_

|

protected |

Definition at line 436 of file lstmtrainer.h.

◆ sub_trainer_

|

protected |

Definition at line 444 of file lstmtrainer.h.

◆ target_win_

|

protected |

Definition at line 392 of file lstmtrainer.h.

◆ training_data_

|

protected |

Definition at line 406 of file lstmtrainer.h.

◆ training_stage_

|

protected |

Definition at line 448 of file lstmtrainer.h.

◆ worst_error_rate_

|

protected |

Definition at line 430 of file lstmtrainer.h.

◆ worst_error_rates_

|

protected |

Definition at line 432 of file lstmtrainer.h.

◆ worst_iteration_

|

protected |

Definition at line 434 of file lstmtrainer.h.

◆ worst_model_data_

|

protected |

Definition at line 439 of file lstmtrainer.h.

The documentation for this class was generated from the following files:

- /home/stefan/src/github/tesseract-ocr/tesseract/lstm/lstmtrainer.h

- /home/stefan/src/github/tesseract-ocr/tesseract/lstm/lstmtrainer.cpp