Poster

- Title: RaiseWikibase: Fast inserts into the BERD instance

- Authors: Renat Shigapov, Jörg Mechnich & Irene Schumm

- Abstract: The open-source tool RaiseWikibase for fast data import and knowledge graph construction with Wikibase is presented.

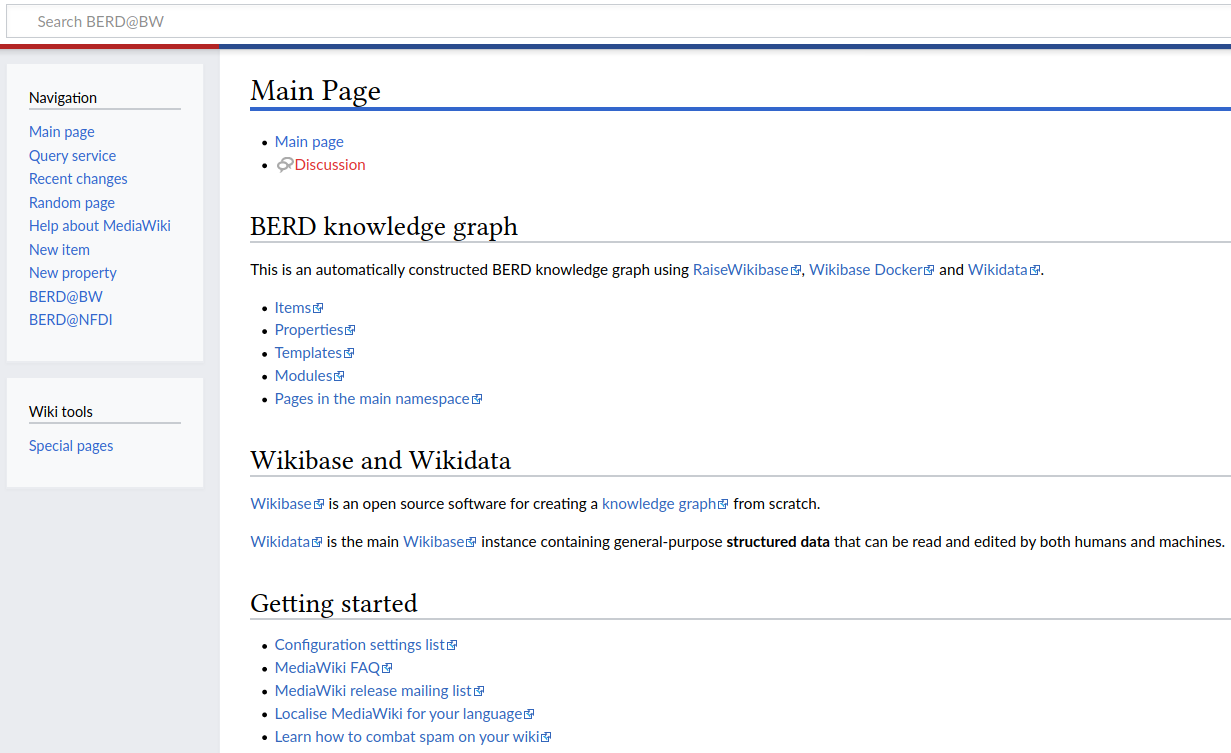

- BERD@BW: Business & Economics Research Data Center Baden-Württemberg

Motivation

- German company data is fragmented over providers, registers & time spans.

- To address this, we are building the BERD knowledge graph for German company data.

- Wikibase is chosen for the implementation, but data import via the Wikibase API is slow compared with direct inserts into MariaDB:

| Wikibase frontend | Wikibase API | MariaDB |
|---|---|---|
| manual page creation | WikidataIntegrator | wikibase-insert |
| | WikibaseIntegrator | RaiseWikibase |
| | wikibase-cli | |
| | WikidataToolkit | |
| | Pywikibot | |
| | QuickStatements | |
| less than 1 page per second | 1-6 pages per second | 100-300 pages per second |
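
To put these rates into perspective, the following back-of-the-envelope sketch converts them into import times. The workload of one million pages is a hypothetical example, not a figure from the BERD project; the rate ranges are the ones quoted in the comparison table.

```python
def import_hours(n_pages: int, pages_per_second: float) -> float:
    """Return the time in hours needed to import n_pages at a constant rate."""
    return n_pages / pages_per_second / 3600


# Hypothetical workload: one million pages (an assumption for illustration).
N_PAGES = 1_000_000

# Rate ranges (pages per second) taken from the comparison table.
RATES = {
    "Wikibase API": (1, 6),
    "MariaDB": (100, 300),
}

for route, (slow, fast) in RATES.items():
    print(f"{route}: {import_hours(N_PAGES, fast):.1f} to "
          f"{import_hours(N_PAGES, slow):.1f} hours")
```

Under these assumptions the API route takes roughly 46 to 278 hours, while direct inserts into MariaDB take roughly 1 to 3 hours.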

RaiseWikibase

- Open-source Python tool.

- Adapted to Wikibase Docker Image “1.35”.

- Connects to MariaDB via the mysqlclient library.

Main functions

The page function executes inserts into the database but does not commit them:

    from RaiseWikibase.dbconnection import DBConnection
    from RaiseWikibase.raiser import page

    # content_model, namespace, text and page_title are supplied by the caller.
    connection = DBConnection()
    page(connection=connection, content_model=content_model,
         namespace=namespace, text=text, page_title=page_title, new=True)
    connection.conn.commit()  # page() does not commit, so commit explicitly
    connection.conn.close()
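
Separating execution from commit is what lets many inserts share one transaction and a single commit. A minimal, self-contained sketch of that pattern follows. It uses Python's built-in sqlite3 module and a made-up one-column table as stand-ins, so it is only an analogy for what happens against MariaDB, not RaiseWikibase's actual code.

```python
import sqlite3


def insert_pages(conn: sqlite3.Connection, titles: list[str]) -> None:
    """Execute one INSERT per title without committing."""
    for title in titles:
        conn.execute("INSERT INTO page (title) VALUES (?)", (title,))


# In-memory database with a hypothetical, simplified 'page' table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE page (title TEXT)")
conn.commit()

insert_pages(conn, [f"Page {i}" for i in range(1000)])
conn.rollback()  # nothing was committed, so the inserts are discarded
rows_after_rollback = conn.execute("SELECT COUNT(*) FROM page").fetchone()[0]

insert_pages(conn, [f"Page {i}" for i in range(1000)])
conn.commit()  # a single commit makes all 1000 inserts permanent
rows_after_commit = conn.execute("SELECT COUNT(*) FROM page").fetchone()[0]

print(rows_after_rollback, rows_after_commit)
conn.close()
```

The first batch disappears on rollback because it was never committed; the second batch becomes visible only after the one commit at the end.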

Multiple page functions are wrapped into a transaction inside the batch function:

    from RaiseWikibase.raiser import batch

    batch(content_model='wikibase-item', texts=[item for i in range(1000)])

where item is the JSON representation of an item created using the entity function.
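
For orientation, the sketch below builds such a JSON representation by hand, following the general shape of the Wikibase JSON data model (type, labels, descriptions, aliases, claims). The helper make_item and the example label and description are hypothetical; in practice the entity function from RaiseWikibase would produce this string, and its exact output may differ.

```python
import json


def make_item(labels: dict[str, str], descriptions: dict[str, str]) -> str:
    """Serialize a minimal Wikibase-style item without claims (hypothetical helper)."""
    entity = {
        "type": "item",
        "labels": {lang: {"language": lang, "value": text}
                   for lang, text in labels.items()},
        "descriptions": {lang: {"language": lang, "value": text}
                         for lang, text in descriptions.items()},
        "aliases": {},
        "claims": {},
        "sitelinks": {},
    }
    return json.dumps(entity)


item = make_item(
    labels={"en": "organization", "de": "Organisation"},
    descriptions={"en": "social entity with a collective goal"},
)
# The same JSON string can be repeated to form a batch of identical test items.
texts = [item for _ in range(1000)]
```

A list like texts is the kind of input the batch call expects for the 'wikibase-item' content model.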

Performance

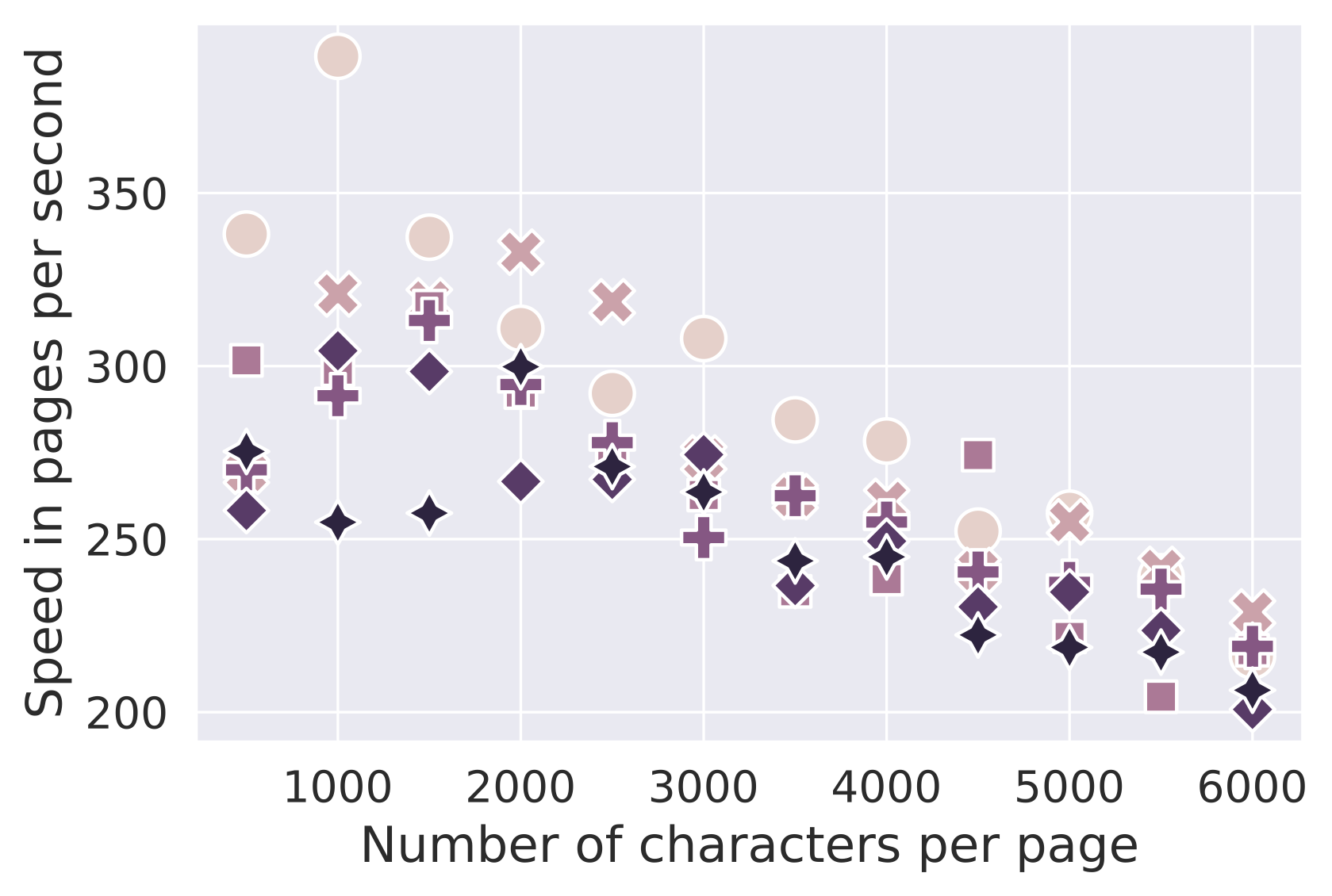

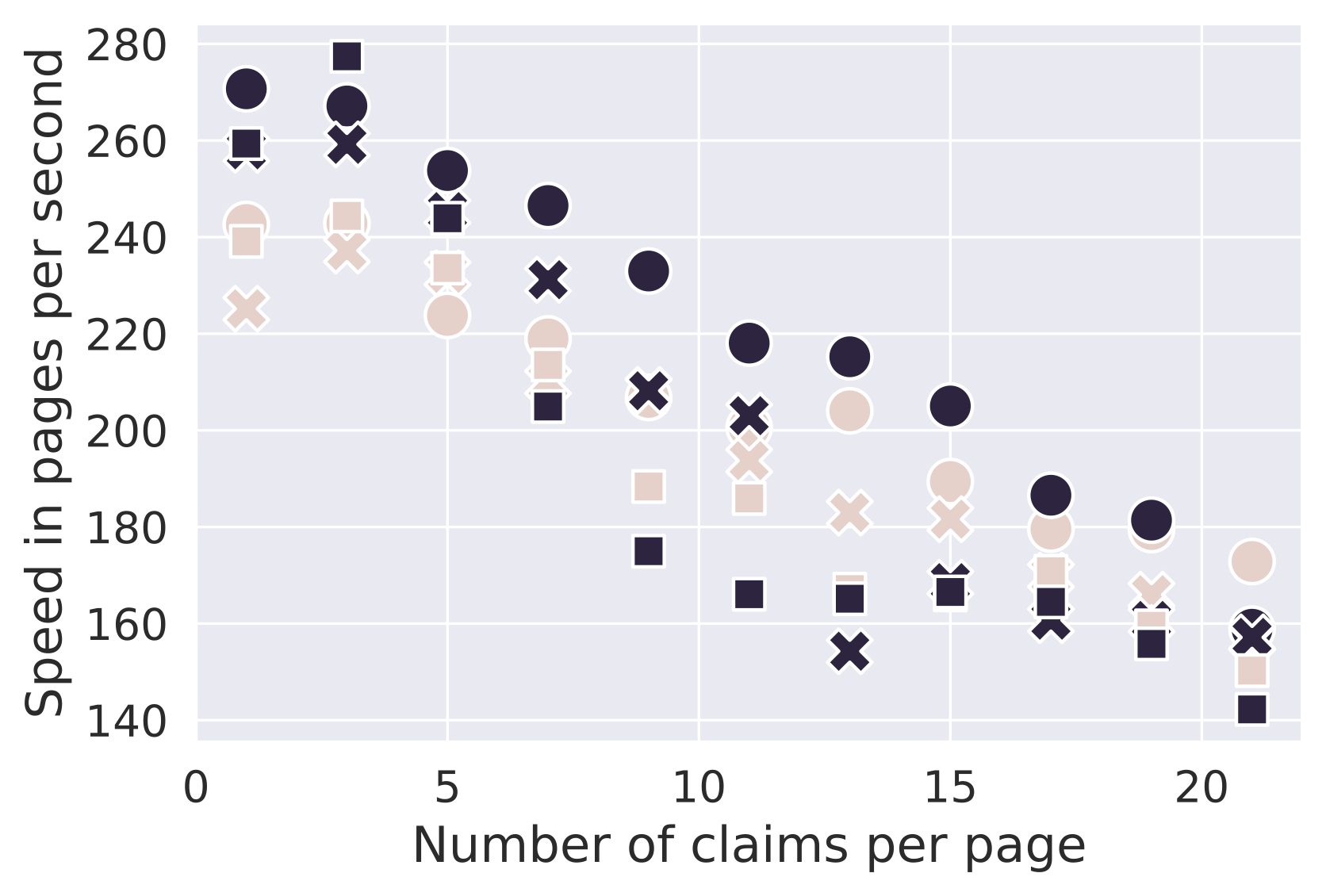

- The insert rate decreases approximately linearly as the number of characters per wikitext and the number of claims per item increase.

- Small pages are uploaded at rates of 250-350 wikitexts per second and 220-280 items per second.
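
Rates like these can be obtained by timing a batch of inserts and dividing the number of pages by the elapsed time. The sketch below shows one way to do that. The function measure_rate and the dummy workload are hypothetical and are not part of RaiseWikibase or of the benchmark behind the figures.

```python
import time
from typing import Callable


def measure_rate(insert_batch: Callable[[int], None], n_pages: int) -> float:
    """Return the pages per second achieved by insert_batch(n_pages)."""
    start = time.perf_counter()
    insert_batch(n_pages)
    elapsed = time.perf_counter() - start
    return n_pages / elapsed


def dummy_insert(n_pages: int) -> None:
    """Stand-in workload: builds strings instead of writing to a database."""
    for i in range(n_pages):
        _ = f"page {i}" * 10


rate = measure_rate(dummy_insert, 10_000)
print(f"{rate:.0f} pages per second")
```

In a real measurement, insert_batch would wrap a call that writes n_pages pages to the database, repeated for several page sizes.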

Figure panels: Wikitexts, Items.

Configuration

- The folder texts contains templates, modules and other unstructured data.

- Modify them and add your own files to texts.

- Run the function fill_texts.

Federated properties

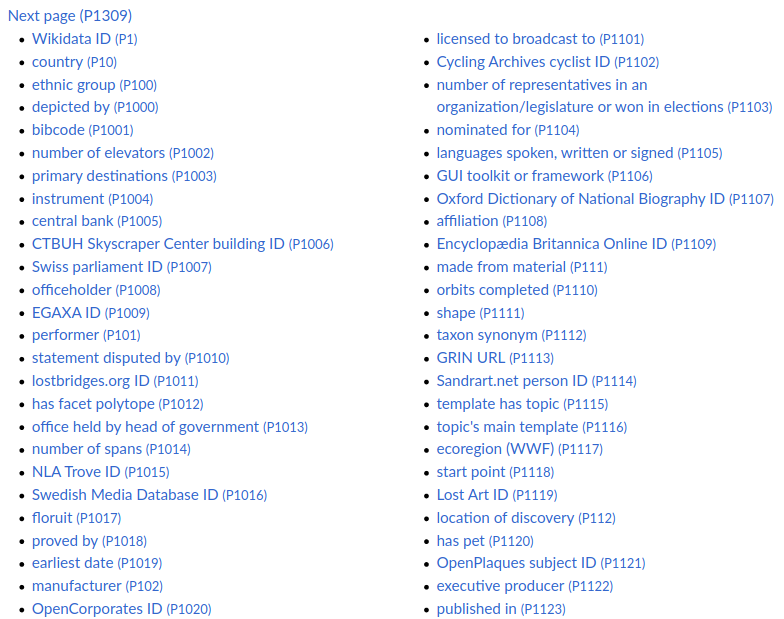

- Federated properties are still under development at Wikimedia Germany.

- Until they are available, run the script miniWikibase.py.

- It creates all properties from Wikidata in a local Wikibase instance.

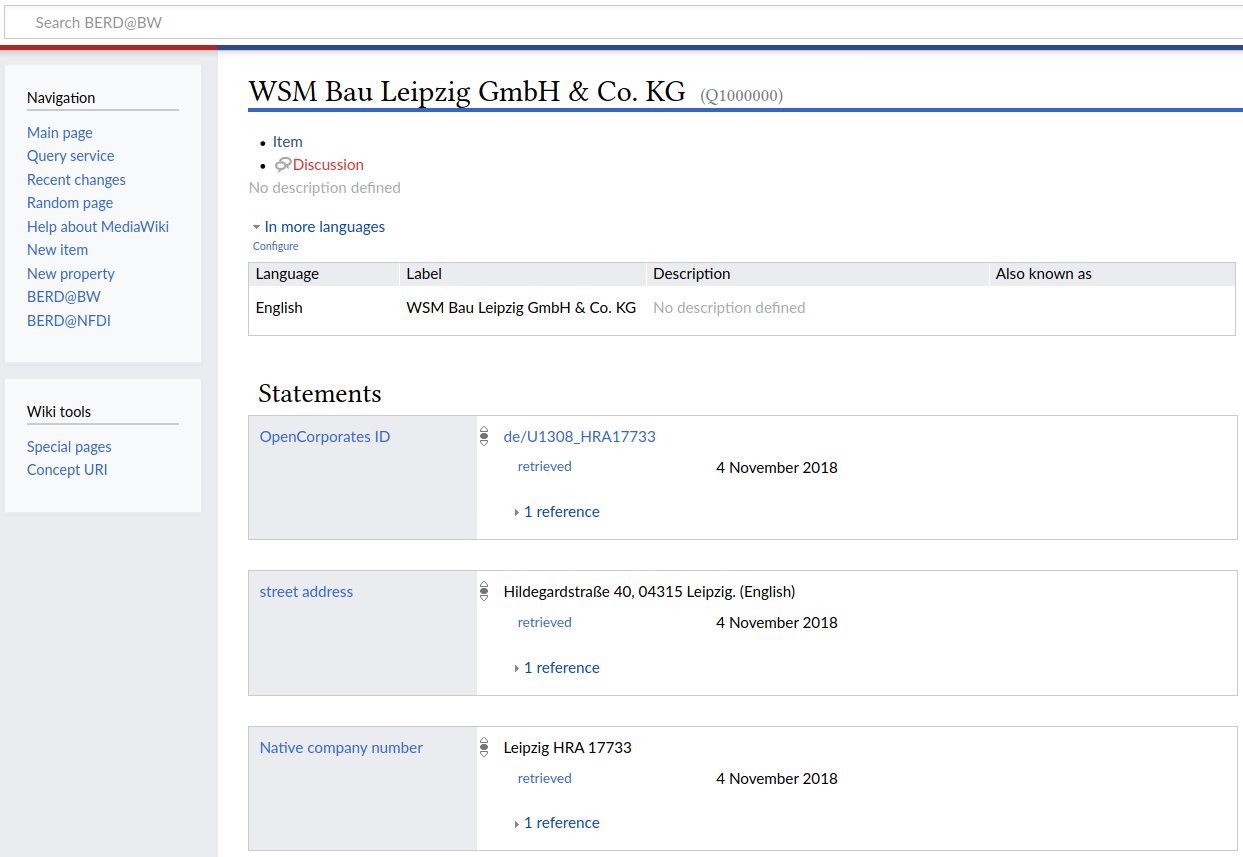

Creating a knowledge graph

- Download the OffeneRegister dataset, donated to OKFD by OpenCorporates.

- The semantic annotator bbw annotates the tables automatically using Wikidata.

- Run megaWikibase.py.

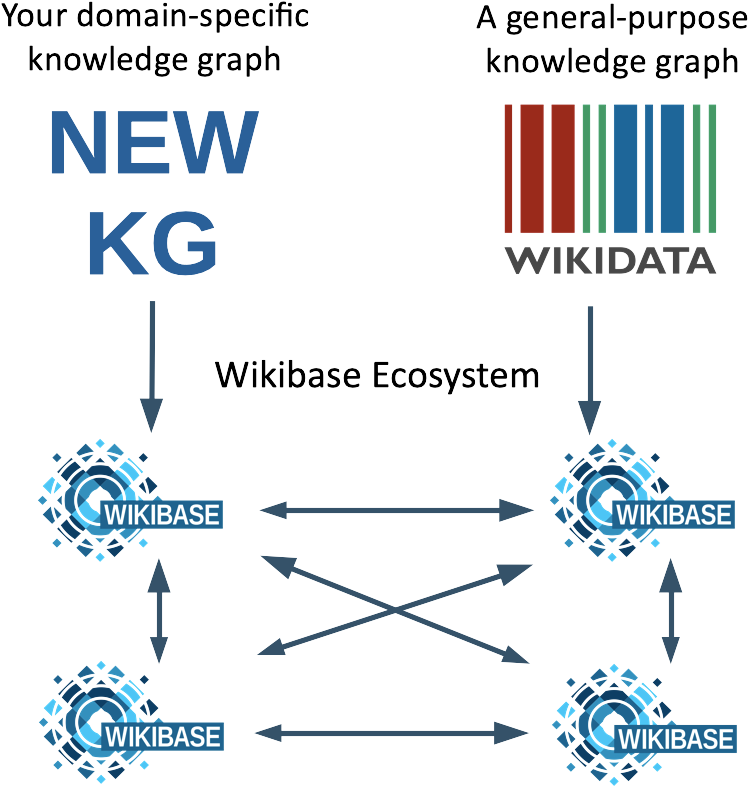

Conclusions

1. Clone RaiseWikibase and adapt the files for your use case.
2. Build your own Wikibase knowledge graph quickly.
3. Join the Wikibase Ecosystem.